About Us

Empowering Data-Driven Success with Expertise in Data Engineering

We are certified data engineers on AWS and GCP, holding the AWS Certified Data Engineer - Associate and Google Cloud Professional Data Engineer certifications. We can help your organization quickly meet its requirements for designing complete ETL pipelines by following industry best practices in data engineering.

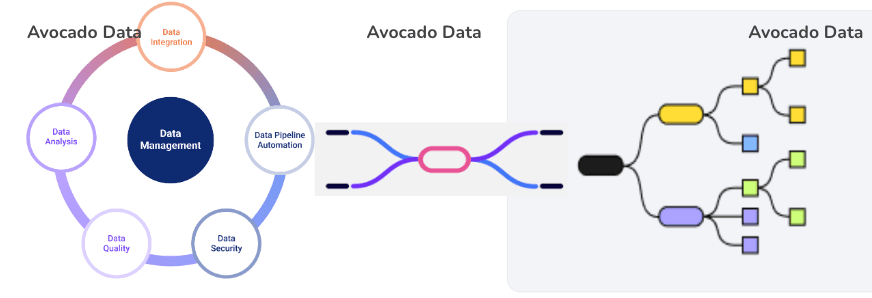

Our Expertise in Data Engineering:

As data engineers who specialize in managing large-scale data lakes and data warehouses (BigQuery, Redshift), we are committed to delivering comprehensive solutions that not only unlock the potential of your data but also ensure its security and compliance. Our data governance and engineering expertise empowers organizations to make informed decisions while upholding the highest data protection standards.

- Scalable Data Pipelines: We design and build high-performance data pipelines using the Apache Spark and Apache Flink frameworks, leveraging Scala or Python to efficiently handle large volumes of data, supporting both batch and stream processing.

- ETL/ELT Implementation: We manage all transformation configurations through YAML files for each table-level ingestion, allowing for more precise and actionable transformations on raw data. This method improves the overall usability of the data lake.

- Data Lake Optimization: We optimize data lake performance and cost-effectiveness by fine-tuning the storage settings of Parquet files. This ensures that the files are neither too small nor too large—avoiding excessive costs associated with ingestion or data retrieval using Athena or other SQL-based interfaces. For more information, check out our technical blogs.

- Cloud-Native Technologies: We utilize cloud-native technologies and serverless architectures for enhanced scalability and cost-efficiency. For example, we use AWS Lambda to sync metadata, even across different AWS accounts. To orchestrate workflows, we combine AWS Step Functions, AWS Lambda, and Amazon EventBridge to automate data ingestion from various sources.

- Ingestion to Data Warehouse: We have a highly configurable pipeline, built with Apache Flink/Apache Spark in Scala, that reads from the data lake or other sources and ingests into data warehouses such as Redshift, BigQuery, and Snowflake. The ingestion pipeline is designed to be self-service: you just provide the source and destination details and the infrastructure where the code will run, such as an Amazon EMR cluster or GCP Dataflow/Dataproc.

- Robust Data Management: We implement robust data governance frameworks to ensure data ownership, quality, and security. When connecting to the data catalog, we always use AWS Lake Formation to maintain data lake security and enable fine-grained access control, including row-level and column-level filtering.

- Data Catalog Integration: We integrate with centralized data catalogs, such as the AWS Glue Data Catalog and GCP Data Catalog, to streamline data discovery and utilization.

- Data Lineage and Impact Analysis: We provide tools and processes to track data lineage and assess the impact of changes to existing infrastructure, such as Databricks or AWS Glue pipelines.
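To make the Data Lake Optimization point above more concrete, here is a minimal Python sketch of how the number of Parquet files per partition might be planned so that files end up neither too small nor too large. The 128 MiB target and the function name `plan_output_files` are illustrative assumptions, not values or code taken from our pipelines; the right target depends on your query engine and workload.

```python
# Assumed target size per Parquet file. 128 MiB is only an illustrative
# starting point; tune it for your own engine and access patterns.
TARGET_FILE_BYTES = 128 * 1024 * 1024


def plan_output_files(partition_bytes: int,
                      target_bytes: int = TARGET_FILE_BYTES) -> int:
    """Return how many files a partition of the given size should be split into.

    Hypothetical helper: rounds up so that no file exceeds the target size,
    and always returns at least one file.
    """
    if partition_bytes < 0:
        raise ValueError("partition_bytes must be non-negative")
    if target_bytes <= 0:
        raise ValueError("target_bytes must be positive")
    # Integer ceiling division avoids floating-point rounding on large sizes.
    return max(1, -(-partition_bytes // target_bytes))


if __name__ == "__main__":
    ten_gib = 10 * 1024 ** 3
    print(plan_output_files(ten_gib))
```

In a Spark job, a number like this could be passed to `DataFrame.repartition` before writing, so that each partition produces roughly target-sized files.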

By combining our data governance and data engineering expertise, we help organizations establish a solid foundation for data-driven initiatives while mitigating risks and ensuring compliance.

Because we have several modular, parameterised codebases ready to be set up in your organization, we can help you bootstrap your data lake solution in a matter of days.